In the spirit of the season, I've been looking at a large dataset of campaign donations from 1980 to 2006. The data is freely available to the public from the FEC; I've parsed it and made it available on my website.

One can form a bipartite graph of committees (such as the Underwater Basketweavers' Political Action Committee) and candidates (such as Abraham Lincoln). Individual donations are all filtered through committees (usually a candidate has one or several designated committees), so this organization-candidate (org-cand) graph is the best way to measure donations to specific candidates.

A surprising observation in our KDD paper was the "fortification effect". First, if one compares the number of unique edges in the graph (that is, the number of distinct org-candidate relationships) with the total weight of the graph (that is, the total $ donated), one finds super-linear behavior: the more unique donor-candidate relationships there are, the higher the average amount per relationship becomes. The power-law exponent in the org-cand graph was 1.5. (This also holds for $ vs. non-unique edges, that is, the number of checks, with exponent 1.15.)

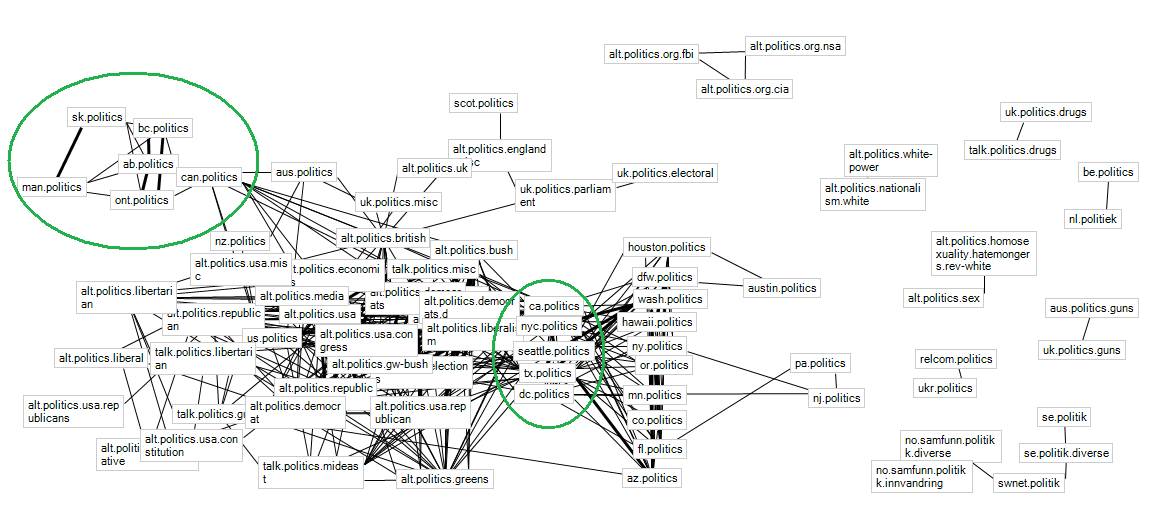
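To make "super-linear" concrete, here is a minimal sketch of how an exponent like the ones above could be estimated. It assumes a plain least-squares fit in log-log space, which is my illustration and not necessarily the procedure used in the paper; the function name `fit_power_law` and the numbers are made up, not FEC data.

```python
import numpy as np

def fit_power_law(x, y):
    """Least-squares fit of y = c * x**a in log-log space; returns (a, c)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    # A straight line in log-log space: log y = a * log x + log c.
    slope, intercept = np.polyfit(np.log(x), np.log(y), 1)
    return slope, np.exp(intercept)

# Synthetic illustration only (not FEC data): total dollars grow as edges**1.5.
edges = np.array([10, 100, 1_000, 10_000], dtype=float)
dollars = 200.0 * edges ** 1.5

exponent, scale = fit_power_law(edges, dollars)

# With an exponent above 1, dollars per edge grows like edges**(exponent - 1).
avg_per_edge = dollars / edges
```

An exponent of exactly 1 would mean the average amount per edge stays flat as the graph grows; anything above 1 means it rises.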

Even more interestingly, if one looks more closely at the individual candidates, similar behavior emerges. The more donors a candidate has, the higher the average amount received from a donor becomes. The plot below shows money received by candidates vs. number of donor organizations.

Each green point represents one candidate, with the y-coordinate being the total money that candidate has received and the x-coordinate being the number of donating organizations. The lower points represent the median within each interval of edge counts, with upper-quartile error bars drawn. The red line is the power-law fit; here we have super-linear behavior between the number of donors and the amount donated (with exponent 1.17). And again, the same is true for non-unique donations: the more checks, the higher the average check.
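For anyone who wants to rebuild the per-candidate points, here is a rough sketch of the aggregation. It assumes the donations are available as `(org, candidate, amount)` tuples, which is a hypothetical layout rather than the actual format of my parsed files. The helper names and the choice of power-of-two bins are also just for illustration, and the sketch computes only the medians, not the quartile bars.

```python
from collections import defaultdict
from statistics import median

def candidate_stats(donations):
    """Map candidate -> (number of unique donor orgs, total dollars received)."""
    donors = defaultdict(set)
    totals = defaultdict(float)
    for org, cand, amount in donations:
        donors[cand].add(org)      # repeat checks from one org count as one edge
        totals[cand] += amount
    return {c: (len(donors[c]), totals[c]) for c in donors}

def binned_medians(stats):
    """Median total per power-of-two donor-count bin, keyed by the bin's lower edge."""
    bins = defaultdict(list)
    for n_donors, total in stats.values():
        # bit_length() - 1 is floor(log2(n)) for n >= 1, with no float rounding.
        bins[n_donors.bit_length() - 1].append(total)
    return {2 ** k: median(v) for k, v in sorted(bins.items())}

# Made-up records for illustration (not FEC data).
donations = [
    ("PAC_A", "cand_1", 500.0),
    ("PAC_A", "cand_1", 250.0),   # second check along the same edge
    ("PAC_B", "cand_1", 1000.0),
    ("PAC_A", "cand_2", 300.0),
    ("PAC_C", "cand_3", 100.0),
]

stats = candidate_stats(donations)
medians = binned_medians(stats)
```

Each entry of `stats` corresponds to one point in the scatter, and `medians` gives one summary value per bin of donor counts.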

Note that this does not include the 2008 data. I hear that Obama's donation patterns are different (lots of little checks, they tell me), but I haven't confirmed this yet.